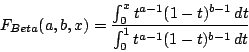

= incompleteBeta(a,b,x)

- (a−1)/(a+b−2) if a > 1 and b > 1

- 0 and 1 if a < 1 and b<1

- 0 if a < 1 and b ≥ 1

- 0 if a=1 and b > 1

- 1 if a ≥ 1 and b < 1

- 1 if a > 1 and b=1

- not defined if a=1 and b=1

http://www.physik.fu-berlin.de/~loison/finance/LOPOR/

Last update: March 2005

©Damien Loison, 2005

The LOPOR library is an efficient library for option pricing and operational risk. It is user-friendly and easy to use in combination with other libraries. For this reason, and contrary to all libraries that I know, no special type of variable is defined. Only types defined in the standard library std are used.

This manual is composed of two distinct parts.

The first part is devoted to present all tools necessary

to solve problems in option pricing and operational risk.

It is not a text book

and only a manual to use with the library.

With these tools you are able to solve any problem in operational

risk. For example see

[Vose2003,Marshall2001,Cruz2002].

The second part is devoted to option

pricing and could be considered as a text book with implementations.

It cannot be considered as exhaustive and is still in expansion.

If you are interested in this part,

I advice you strongly to read the section

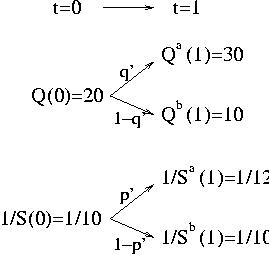

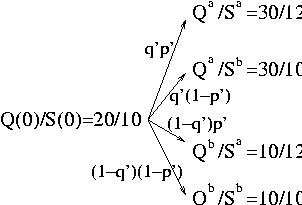

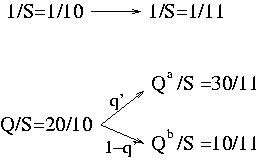

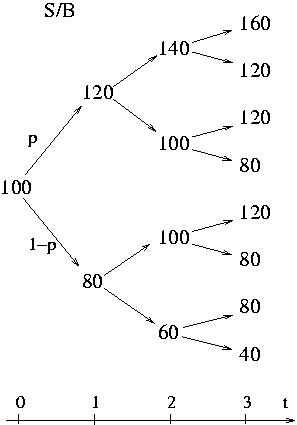

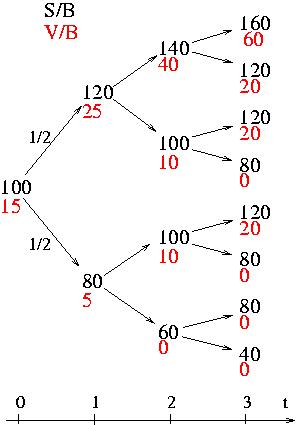

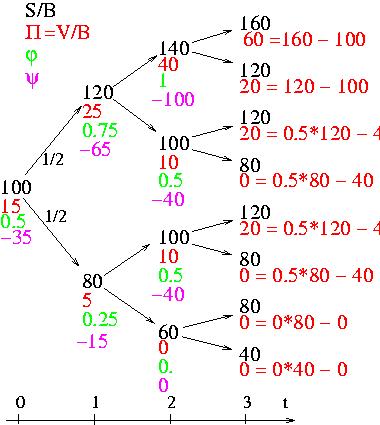

Simple binomial model first.

It present some fundamental points of option pricing,

martingales and risk neutral considerations, through a very simple

example. It i very useful to understand these concepts in this case

before going to more complicated modelization.

This library could have some bugs. If you find one, please send me an email. Moreover if you do not find a function which could be useful for you, or if you do not understand something, please send me an email: Damien.Loison@physik.fu-berlin.de

All the library uses the LOPOR namespace. You have two ways to include the library:

"Our library is carefully made and extremely efficient …, obviously."

The errors are managed through the Error.hpp class. An Error is thrown if there is a problem. The syntax to throw one error is:

#include "Error.hpp"

#include "Global.hpp"

LOPOR::Error("define the error" + LOPOR::c2s(value) + "what you want" );

value can be a double, integer, boolean, etc. We use the function c2s( ) for "convert to string" defined in the class Global.hpp. To catch the Error the program must look like:

// Example Error1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Exponential exp;

exp.setParameter(-2);

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

And the output of this program is:

Error: LOPOR::Exponential(-2)::setParameter( ) = > The variable:-2 must be > 0

We can replace error.information( ); by std::cout < < error.value << std::endl;

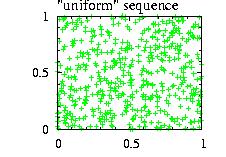

The class Random.hpp returns a random number between 0 and 1. There is no need create an instance of the class. You should use directly the static functions after having included the class with #include "Random.hpp"

| static double Random::ran( ) |

|

return a random number between 0 (included) and 1 (excluded) |

| static double Random::ranZero( ) |

|

return a random number between 0 (excluded) and 1 (excluded) |

| static vector <double> Random::ranVector(int n) |

|

return n random numbers between 0 (included) and 1 (excluded) |

| static void Random::setSeed(vector < int> seed) |

|

seed is a vector with 35 elements. The last two elements should not be zero. Usually used in combination with getSeed( ). |

| static vector < int> Random::getSeed( ) |

|

return a vector < int> with 35 elements. |

It is interesting to observe that some libraries propose to create many instances of the random generator with different seeds for each. This is wrong. The random numbers will not be independent and in particular if the choices of the seeds are in the same serie, there will be a very strong correlation between the different random numbers. If a library proposes this choice you could have some doubts about the reliability of the entire library.

To be able to run two programs successively with non correlated random numbers you should save the seed at the end of the first program using std::vector < int> s_fin=getSeed( ) and then set the seed at the beginning of the second program using setSeed(s_fin).

Following the last two paragraphs we can understand why to propose a function ranSeed( ) where the seed is initialized with the time or something else has no meaning. The computer is not luckier than you. To program a game it could be all right, but not in finance.

In this chapter we provide a way to obtain a random number generator for any distributions. This is in contrast with the majority of libraries which only give random number generators for predefined distributions. In addition to them, we give some classes to modify them (Homotecy, Multiply, Interval, Translate), to Sum them and also two general procedures, HeatBath and Hasting, to simulate any distributions.

The syntaxes of all distributions are alike. They are defined as a child

of the Distribution class defined in the file

"Distribution.hpp".

To use a class you can include the definitions of all

classes by #include "LOPOR.hpp"

or include the header file of the class like:

#include "Exponential.hpp" if we take the

Exponential distribution as example.

First you have to define an instance of the class:

Exponential exp

Then the functions that you can apply to this instance are:

| void setParameters(vector <double> parameters) |

|

define the parameters, for example : E=1/a exp(−x/a) with a=parameters[0]. The type and the number on parameters depends of the distribution. This function is defined in Distribution.hpp and inherited. Be careful of the name difference: this function is with "s" at the end, contrary to the next function. |

| void setParameter(double a, double b) |

|

define the parameters, for example : E=1/a exp(−x/a). The type and the number of parameters depends on the distribution. This function is not defined in Distribution.hpp and not inherited. The previous function is inherited. Be careful of the name difference: this one is without "s", the next with "s" at the end. |

| vector <double> get_Parameters( ) |

|

return a vector with all parameters of the distribution. |

| double ran( ) |

|

return a random number following the distribution. |

| vector <double> ranVector(int n) |

|

return n random numbers following the distribution. |

| vector <double> ranVectorLH(int n) |

|

return n random numbers following the distribution using the Latin Hypercube sampling. Give a better result than ranVector(n) but you must be cautious when using it: all the random numbers must be used to calculate the integrals. |

| double density(double x) |

|

return the density, called also the probability density or mass function. |

| vector <double> densityVector(vector<double > vec_x) |

|

return a vector with the density for each element of vec_x. |

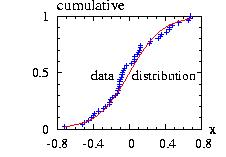

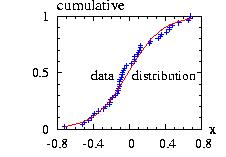

| double cumulative(double x) |

|

return the cumulative distribution function F(x). F(x) varies from between 0 to 1. |

| vector <double> cumulativeVector(vector<double > vec_x) |

|

return a vector with the cumulative for each element of vec_x. |

| double mean( ) |

|

return the average. |

| double mode( ) |

|

return the mode. |

| double variance( ) |

|

return the variance. |

| double sigma( ) |

|

return the standard deviation = sqrt(variance( )) |

| double ran_fc(double y) |

|

return the inverse of the cumulative function

F−1(y) when it is known, with y between 0 and 1.

This function can be used to construct the function

ran( ): |

| std::string information( ) |

|

return information about the distribution. |

| vector <double> fit_keep |

|

This vector is used for the fit using the non linear functions LeastSquares_LM_cum( ) and LeastSquares_LM_den. Keep some parameters constant during the Fit. For example fit_keep={1,4} will keep the parameter number 1 (the second, the count begins at 0) and the number 4 (the fifth) constant. See Fit_LeastSquares_LM_cum2.cpp for an example. |

| vector <double> get_fit_keep_dist( ) |

|

return a vector with the constant parameters for the fit. For usual distribution return fit_keep. However if the distribution is constructed calling another distribution(s) like the class Translate, it is the sum of fit_keep of the distribution itself and the one from the called distribution. See an example in Fit_LeastSquares_LM_cum2.cpp |

| vector <double> get_fit_keep_cum_LM( ) |

|

return a vector with the constant parameters for the fit when using Fit_LeastSquares_LM_cum. It is implemented for each distribution. For example the vector {0,2} means that the first and third parameters will be kept constant during the fit. |

| vector <double> get_fit_keep_den_LM( ) |

|

return a vector with the constant parameters for the fit when using Fit_LeastSquares_LM_den. It is implemented for each distribution. For example the vector {0,2} means that the first and third parameters will be kept constant during the fit. |

An error is thrown if the function called does not exist.

Example of program:

// LOPOR.hpp include all the headers of the LOPOR library

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

// create an instance

Exponential dist ;

// define the parameter a=2.

dist.setParameter(2.);

// another possibility: create a vector

dist.setParameters(c2v(2.));

// create the vector {0,1,2,3,…,9}

std::vector <double> vecX(vec_create(10,0.,1.));

// {f(0),f(1),…,f(9)}: f(x)=0.5 exp(-x/2)

std::vector <double> vecY(dist.densityVector(vecX));

// create a vector with 1000 random numbers

std::vector <double> ranE(dist.ranVector(1000));

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

The information given in this chapter comes mainly from [Johnson1994a] and [Evans2000].

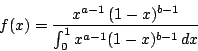

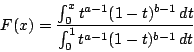

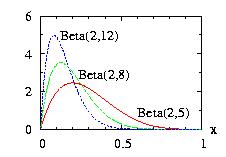

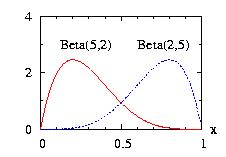

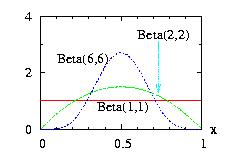

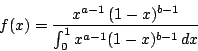

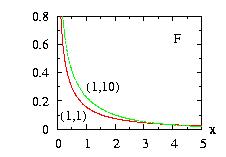

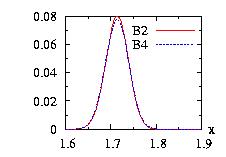

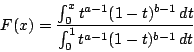

| class: | Beta.hpp |

| density: |

|

| restrictions: | a > 0, b > 0 |

| domain: | 0 ≤ x ≤ 1 |

| cumulative: |

= incompleteBeta(a,b,x) |

| mean: | a/(a+b) |

| mode: |

|

| variance: | a.b.(a+b)−2.(a+b+1)−1 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a > 0, b > 0 |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

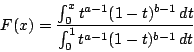

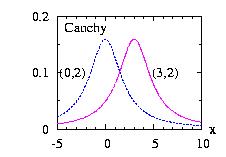

| class: | Cauchy.hpp |

| density: | f(x) = ( b2+(x−a)2 )−1/(π b) |

| restrictions: | b > 0 |

| domain: | −∞ < x < +∞ |

| cumulative: | F(x) = 0.5 + π−1 tan−1 ( (x−a) b−1 ) |

| mean: | not defined |

| mode: | a |

| variance: | not defined |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | b positive. |

|

double mean( ) double variance( ) double sigma( ) |

not defined an Error is thrown when called. |

| vector <double> Moments(Distribution* dist,vector <double> vecX) |

not defined an Error is thrown when called. |

All the other fit functions described in Fit are accessible.

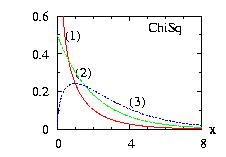

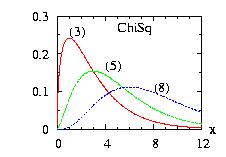

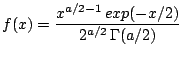

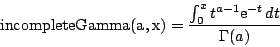

| class: | ChiSq.hpp |

| density: |

|

| restrictions: | a > 0 |

| domain: | x > 0; |

| cumulative: | incompleteGamma(a/2,x/2) |

| mean: | a |

| mode: |

|

| variance: | 2 a |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a > 0 |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

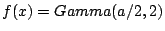

| class: | Exponential.hpp |

| density: | f(x) = a-1 exp(− x/a) |

| restrictions: |

a > 0 |

| domain: | x > 0 |

| cumulative: | F(x) = 1 − exp(−x/a) |

| mean: | a |

| mode: | 0 |

| variance: | a2 |

In addition to the general syntax, we have:

| void setParameter(double a) |

a > 0 |

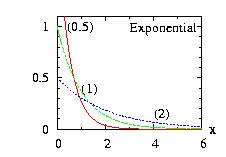

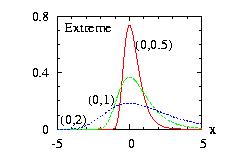

| class: |

Extreme.hpp Known also as Gumbel distribution |

| density: | f(x) = b−1 exp[ −(x−a)/b − exp(−(x−a)/b) ] |

| restrictions: | b > 0 |

| domain: | −∞ < x < +∞ |

| cumulative: | F(x) = exp[ − exp(−(x−a)/b) ] |

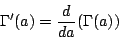

| mean: | a − b Γ'(1) |

| mode: | a |

| variance: | b2 π2/6 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | b > 0. |

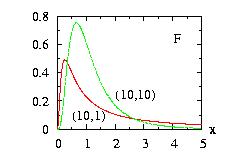

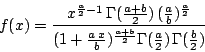

| class: | F.hpp |

| density: |

|

| restrictions: |

a > 0 b > 0 |

| domain: | 0 < x < +∞ |

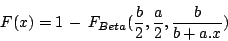

| cumulative: |

|

| mean: | b/(b − 2) if b > 2 |

| mode: | b/a . (a − 2)/(b + 2) if a > 2 |

| variance: | 2 b2 (a + b − 2)/ [ a (b − 2)2 (b − 4) ] if b > 4 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) |

a > 0. b > 0. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

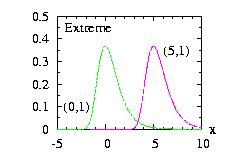

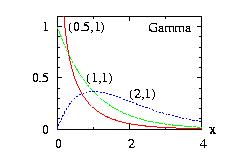

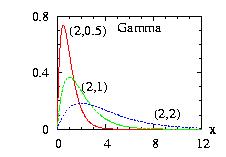

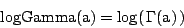

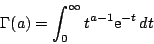

| class: | Gamma.hpp |

| density: | xa−1 exp(−x/b) /( Γ(a) ba ) |

| restrictions: | a > 0 and b > 0 |

| domain: | 0 ≤ x |

| cumulative: | incompleteGamma(a,x/b) |

| mean: | a b |

| mode: |

|

| variance: | a b2 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a and b positive. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

The x coordinates are not necessary equidistant. However in

this case the calls to the functions density(x) and cumulative(x) are slower.

We use the Walker class to calculate

the properties of this class.

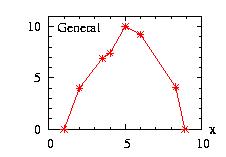

| class: | General.hpp |

| density: |

f(x) = pi +

(pi+1−pi)

(x−xi) / (xi+1−xi) i is an integer from 0 to n−1 |

| restrictions: |

n ≥ 1 pi ≥ 0 and at least one pi ≠ 0; p has n components xi < xi+1; x has n components |

| domain: | x0 ≤ x ≤ xn−1 |

| cumulative: | |

| mean: | |

| mode: | no closed form |

| variance: |

In addition to the general syntax, we have:

|

void setParameter ( vector <double> x, vector <double> p) |

x and p have n components. See restrictions above. |

| int get_i ( double x ) | return the number of the interval (0 to n−1) corresponding to the value x. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

Fit No fit functions described in Fit are accessible.

The x coordinates are not necessary equidistant. However in

this case the calls to the functions density(x) and cumulative(x) are slower.

We use the Walker class to calculate

the properties of this class.

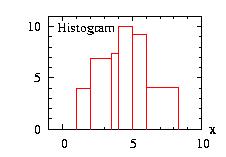

| class: | Histogram.hpp |

| density: | f(x) = pi if xi ≤ x < xi+1 i is an integer from 0 to n−1 |

| restrictions: |

n ≥ 2 pi ≥ 0 and at least one pi ≠ 0, there are n−1 probabilities pi xi < xi+1, there are n values xi |

| domain: | x0 ≤ x ≤ xn−1 |

| cumulative: | |

| mean: | |

| mode: | no closed form |

| variance: |

In addition to the general syntax, we have:

|

void setParameter ( vector <double> x, vector <double> p) |

x and p have n components and n−1 components, respectively. See restrictions above. |

| int get_i ( double x ) | return the number of the interval (0 to n−1) corresponding to the value x. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

Fit

No fit functions described in Fit are accessible.

A related class is the StepFunction class.

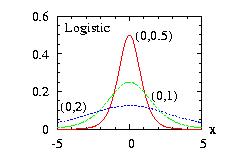

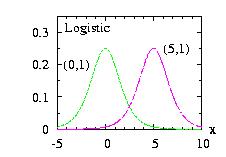

| class: | Logistic.hpp |

| density: |

f(x) = z b−1 (1 + z)−2 with z = exp[ − (x − a) / b ] |

| restrictions: | b > 0 |

| domain: | −∞ < x < +∞ |

| cumulative: | F(x) = ( 1 + z )−1 |

| mean: | a |

| mode: | a |

| variance: | b2 π2 / 3 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | b > 0. |

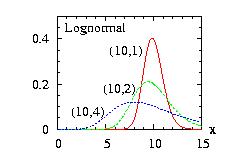

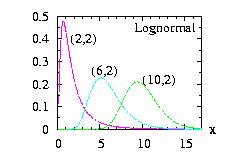

| class: | Lognormal.hpp |

| density: |

f(x) = x−1

( 2 π σ2 )−1/2

exp[

− ( log( x ) − μ )2 / ( 2

σ2 )

] μ = log [ a2 / ( b2 + a2 )1/2 ] σ = [ log( (b2 + a2 ) / a2 ) ]1/2 |

| restrictions: | a > 0 and b > 0 |

| domain: | 0 ≤ x |

| cumulative: | Normalcumulative( (log(x)-μ)/σ ) |

| mean: | a |

| mode: | exp( μ − σ2 ) |

| variance: | b2 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a > 0 and b > 0 |

|

vector <double>

E.ranVectorLH(int n); double E.ran_fc(double y); double E.cumulative(double x); |

not defined an Error is thrown when called. |

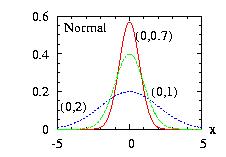

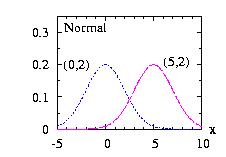

| class: | Normal.hpp |

| density: | f(x) = ( 2 π σ2 )−1/2 exp[ − (x − μ)2 / ( 2 σ2 ) ] |

| restrictions: | σ > 0 |

| domain: | −∞ < x < +∞ |

| cumulative : | 0.5+0.5*incompleteGamma( 0.5 , (x − μ)2 / (2 σ2) ) * sign(x − μ) |

| mean: | μ |

| mode: | μ |

| variance: | σ2 |

In addition to the general syntax, we have:

| void setParameter(double μ, double σ) |

|

μ > 0 |

|

static double

static_ran(double mean=0, double var=1); |

|

Static function. Return a random number from a normal distribution with the mean and the variance given as parameter. |

| static vector <double> static_ranVector(int n); |

|

Static function. Return n random numbers following the Normal distribution. |

|

static double

static_density(double x, double mean=0, double var=1); |

|

Static function. Return the density of a normal distribution with the mean and the variance given as parameter. |

|

static double

static_cumulative(double x, double mean=0, double var=1); |

|

Static function. Return the cumulative of a normal distribution with the mean and the variance given as parameter. |

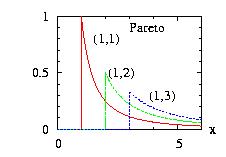

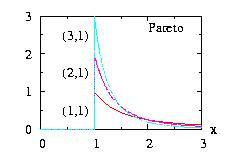

| class: | Pareto.hpp |

| density: | f(x) = θ aθ x−θ−1 |

| restrictions: | θ > 0 and a > 0 |

| domain: | a ≤ x |

| cumulative: | F(x) = 1 − (a/x)θ |

| mean: | a θ / (θ − 1) |

| mode: | a |

| variance: | a2 θ (θ −1)−2 (θ −2)−1 |

In addition to the general syntax, we have:

| void setParameter(double θ, double a) | θ > 0 and a > 0 |

| vector <double> Moments(Distribution* dist,vector <double> vecX) |

not defined an Error is thrown when called. |

All the other fit functions described in Fit are accessible.

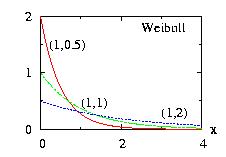

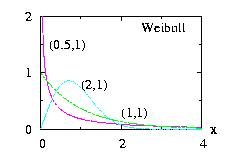

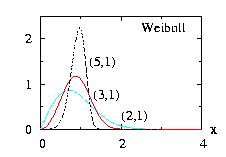

The Rayleigh is the Weibull distribution with a = 2.

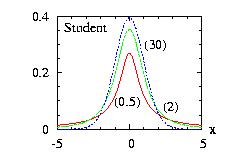

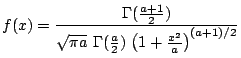

| class: | Student.hpp |

| density: |

|

| restrictions: | a > 0 |

| domain: | −∞ < x < +∞ |

| cumulative: |

0.5+0.5*( incompleteBeta(a/2,0.5,1)−

incompleteBeta(a/2,0.5,a/(a+x*x)) )*sign(x) |

| mean: | 0 if a > 1 |

| mode: | 0 |

| variance: | a / (a − 2) |

In addition to the general syntax, we have:

| void setParameter(double a) | a positive. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); double E.cumulative(double x); |

not defined an Error is thrown when called. |

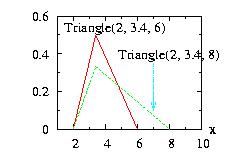

It is similar to the General

class with 2 sections.

| class: | Triangle.hpp |

| density: |

f(x) = 2 (x − a)

(b − a)−1

(c − a)−1

if a ≤ x ≤ b f(x) = 2 (c − x) (c − a)−1 (c − b)−1 if b < x ≤ c |

| restrictions: | a ≤ b ≤ c and a < c |

| domain: | a ≤ x ≤ c |

| cumulative: |

F(x) = 0 if x < a F(x) = (x − a)2 (b − a)−1 (c − a)−1 if a ≤ x ≤ b F(x) = 1 − (c − x)2 (c − a)−1 (c − b)−1 if b < x ≤ c F(x) = 1 if c < x |

| mean: | (a + b + c)/3 |

| mode: | b |

| variance: | (a2 + b2 + c2 − a b − a c − b c)/18 |

In addition to the general syntax, we have:

| void setParameter(double a, double b, double c) | a ≤ b ≤ c and a < c |

Fit No fit functions described in Fit are accessible.

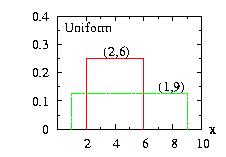

| class: | Uniform.hpp |

| density: | f(x)= 1/(b − a) if a ≤ x ≤ b |

| restrictions: | a < b |

| domain: | a ≤ x ≤ b |

| cumulative: |

F(x) = 0 if x < a F(x) = (x − a) / (b − a) if a ≤ x ≤ b F(x) = 1 if b < x |

| mean: | (a + b)/2 |

| mode: | not defined |

| variance: | (b − a)2 / 12 |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a ≤ b |

|

double mode( ); |

not defined an Error is thrown when called. |

Fit No fit functions described in Fit are accessible.

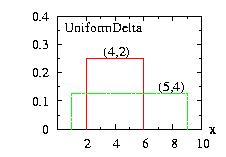

| class: | UniformDelta.hpp |

| density: | f(x)= 1/(2 δ ) if xi − δ ≤ x ≤ xi + δ |

| restrictions: | |

| domain: | xi − δ ≤ x ≤ xi + δ |

| cumulative: |

F(x) = 0 if x < xi − δ F(x) = (x − xi + δ) / (2 δ) if xi − δ ≤ x ≤ xi + δ F(x) = 1 if x > xi + δ |

| mean: | xi |

| mode: | not defined |

| variance: | δ2 / 3 |

In addition to the general syntax, we have:

| void setParameter(double xi, double δ) | |

| void setParameter(double xi) | The parameter δ keeps its value. If δ not already defined, δ=1 automatically. |

| void ran_(double xi) | identical to ran() but the xi is updated before the call of ran() |

|

double mode( ); |

not defined an Error is thrown when called. |

This class should not be used with the Interval class.

Never program something like that:

//WRONG UniformDelta uniDel; uniDel.setParameter(0,1); Interval interval; interval.setParameter(&uniDel,0,10,2);It will not work, the xi in uniDel will not be updated.

| class: | Weibull.hpp |

| density: | f(x)= a b−a xa−1 exp( −(x/b)a ) |

| restrictions: | a > 0 and b > 0 |

| domain: | x > 0 |

| cumulative: | F(x)= 1 − exp( −(x/b)a ) |

| mean: | Γ(1/a) b/a |

| mode: | b (1 − 1/a)1/a |

| variance: | [ 2 Γ(2/a) − Γ(1/a)2 /a ] b2/a |

In addition to the general syntax, we have:

| void setParameter(double a, double b) | a > 0 and b > 0 |

Information given in this chapter comes mainly from [Johnson1994b] and [Evans2000].

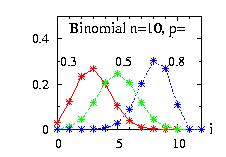

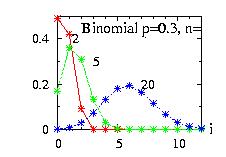

| class: | Binomial.hpp |

| density: |

|

| restrictions: | 0 < p < 1 and n={0,1,2,…} |

| domain: | x ∈ {0,1,2,…,n} |

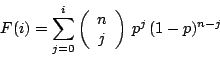

| cumulative: |

|

| mean: | n p |

| mode: |

|

| variance: | n p (1 − p) |

In addition to the general syntax, we have:

| void setParameter(int n, double p) | 0 < p < 1 and n={0,1,2,…} |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

Fit

All fit functions described in Fit are accessible.

Moreover the fit_keep is initialized with the constraint

that the

first parameter of the class, n, is kept const when using

LeastSquares_LM_cum

and LeastSquares_LM_den.

The ran( ) function is on the form double. You

should use the function LOPOR::

c2floor( ) provided in the

class Global to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program:

// Example Binomial1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Binomial bino;

bino.setParameter(10,0.2);

// print 1 random number

print("bino.ran( )=",c2floor(bino.ran( )));

// print 10 random numbers

vec_print(vec_c2floor(bino.ranVector(10)),"results ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

The output of this program is:

bino.ran( )= 1

# i= results

0 3

1 3

2 2

3 3

4 1

5 2

6 4

7 1

8 1

9 4

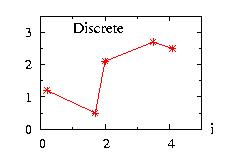

| class: | Discrete.hpp |

| density: |

f(xi) = pi integer i from 0 to n−1 |

| restrictions: |

n ≥ 1 pi ≥ 0 and at least one pi ≠ 0, there are n probabilities pi xi < xi+1, there are n values xi |

| domain: | x ∈ {x0,x1,…,xn} |

| cumulative: | F(xi) = p0 + p1 + … + pi |

| mean: | ( p0 x0 + p1 x1 + … pn−1 xn−1 ) / n |

| mode: | |

| variance: | ( p0 (x0 − mean)2 + p1 (x1 − mean)2 + … + pn−1 (xn−1 − mean)2 )/ n |

In addition to the general syntax, we have:

| void setParameter(vector <int> x, vector <double> double p) | x and p have n elements. |

| void setParameter(vector <int> x) | x has n elements. All the {pi} are equal: pi=1/n; |

|

double ran_fc(double y); |

not defined an Error is thrown when called. |

Fit

No fit functions described in Fit are accessible.

The class uses the Walker

procedure.

The ran( ) function is on the form double. You

should use the function LOPOR::c2floor( ) to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program:

// Example Discrete1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Discrete disc;

disc.setParameter(c2v<double>(0.2,1.7,2.0,3.5,4.1),

c2v<double>(1.2,0.5,2.1,2.7,2.5));

// print 1 random number

print("disc.ran( )=",disc.ran( ));

// print 10 random numbers

vec_print(disc.ranVector(10),"results ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

The output of the program is:

disc.ran( )= 4.1

# i= results

0 0.2

1 1.7

2 4.1

3 2

4 2

5 4.1

6 3.5

7 3.5

8 2

9 0.2

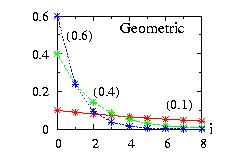

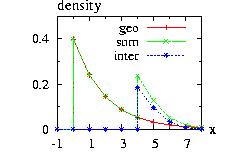

| class: | Geometric.hpp |

| density: |

f(i) = p (1 − p)i |

| restrictions: | 0 < p ≤ 1 |

| domain: | integer i ≥ 0 |

| cumulative: | F(i) = 1 − (1 − p)i+1 |

| mean: | (1 − p) / p |

| mode: | 0 |

| variance: | (1 − p) / p2 |

In addition to the general syntax, we have:

| void setParameter(double p) | 0 < p ≤ 1 |

Fit

All fit functions described in Fit are accessible.

Moreover the fit_keep is initialized

with the constraint that the

first parameter of the class, n, is kept const when using

LeastSquares_LM_cum

and LeastSquares_LM_den.

The ran( ) function is on the form double. You

should use the function LOPOR::c2floor( ) to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program:

// Example Geometric1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Geometric geo;

geo.setParameter(0.3);

// print 1 random number

print("geo.ran( )=",c2floor(geo.ran( )));

// print 10 random numbers

vec_print(vec_c2floor(geo.ranVector(10)),"results ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

The output of this program is:

geo.ran( )= 6

# i= results

0 0

1 0

2 0

3 1

4 2

5 1

6 3

7 3

8 1

9 0

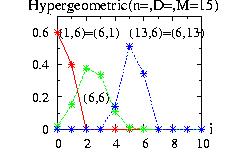

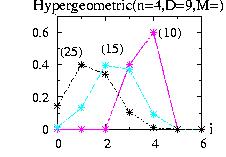

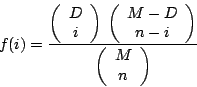

| class: | Hypergeometric.hpp |

| density: |

|

| restrictions: |

0 < n ≤ M 0 < D ≤ M M > 0 D, M, n integer |

| domain: |

integer i ≥ 0 maximum(0,n + D − M) ≤ i ≤ minimum(n,D) |

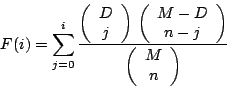

| cumulative: |

|

| mean: | n D / M |

| mode: | no closed form |

| variance: | D (M − D) n /M2 |

In addition to the general syntax, we have:

| void setParameter(int n, int D, int M) | see restriction above |

| double mode( ) | test all i, can be time consuming |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

Fit

No fit functions described in Fit are accessible.

The ran( ) function is on the form double. You

should use the function LOPOR::c2floor( ) to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program: see Geometric1.cpp.

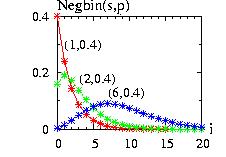

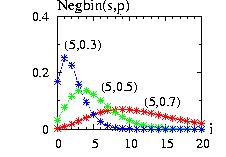

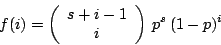

| class: | Negbin.hpp |

| density: |

|

| restrictions: |

integer s > 0 0 < p ≤ 1 |

| domain: |

integer i ≥ 0 |

| cumulative: |

|

| mean: | s (1 − p) / p |

| mode: |

z and z+1 if z is an integer (int)(z+1) otherwise z=( s (1 − p) − 1 ) / p |

| variance: | s (1 − p) / p2 |

Note: for s=1 the negative binomial distribution is equivalent to the

geometric distribution:

Negbin (1,p)=Geometric(p)

In addition to the general syntax, we have:

| void setParameter(int s, double p) | see restriction above |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

Fit

All fit functions described in Fit are accessible.

Moreover the fit_keep is initialized that the

first parameter of the class, s, is kept const when using

LeastSquares_LM_cum

and LeastSquares_LM_den.

The ran( ) function is on the form double. You

should use the function LOPOR::c2floor( ) to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program: see Geometric1.cpp.

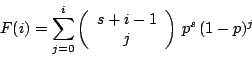

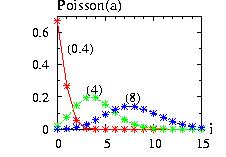

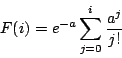

| class: | Poisson.hpp |

| density: |

|

| restrictions: | a > 0 |

| domain: |

integer i ≥ 0 |

| cumulative: |

|

| mean: | a |

| mode: |

a, a − 1 if a is an integer (int)(a) otherwise |

| variance: | a |

In addition to the general syntax, we have:

| void setParameter(double a) | a > 0 |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

not defined an Error is thrown when called. |

The ran( ) function is on the form double. You

should use the function LOPOR::c2floor( ) to get the integer.

Similarly with the ranVector( )

function

you should use the function vec_c2floor( ) provided in the class

Vector.

Example of program: see Geometric1.cpp.

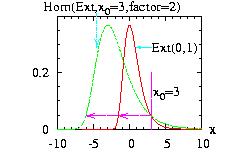

The class Homotecy.hpp allows you to make a homotocy

around a point x0 by a factor that you

give:

The class Homotecy.hpp allows you to make a homotocy

around a point x0 by a factor that you

give:

(x − x0) → (x − x0).factor

then the new instance Homotecy(&distribution,x0,factor)

can be used as an usual distribution.

In addition to the general

syntax, we have:

| void setParameter(Distribution* d, double x0, double factor); |

|

where Distribution* is the address of the distribution to transform. |

| void refresh( ); |

|

if the distribution (Extreme in our example) has changed you should refresh the class. This is not done automatically because it is very time consuming to check it at each call of ran( ) Moreover the call of refresh( ) call the refresh( ) function of the distribution given as parameter. |

Fit

All fit functions described in Fit are accessible.

Moreover the fit_keep is initialized with the constraint

that the

first parameter of the class, x0, is kept const when using

LeastSquares_LM_cum

and LeastSquares_LM_den.

The program to

generate the figure above could be:

// Example Homotecy1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Extreme Ext;

Ext.setParameter(0,1);

Homotecy Hom;

Hom.setParameter(&Ext,3,2);

// vecX={-10, -9.99, -9.98,…, 9.99, 10}

std::vector<double> vecX(vec_create(2001,-10.,0.01));

// to create the figure above:

// print in file "Homotecy1.res", the vectors:

// i vecX density(Extreme) density(Homotecy)

// 0 -10 0 1.37459e-13

// 1 -9.99 0 1.61336e-13

// 2 -9.98 … ….

vec_print("Homotecy1.res",vecX,Ext.densityVector(vecX),

Hom.densityVector(vecX));

// print 10 random numbers

vec_print(Hom.ranVector(10),"results ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Homotecy1.res" used to plot the figure above and print on the screen:

# i= results

0 1.52601

1 -5.81126

2 -3.90537

3 -3.49595

4 -2.61605

5 -1.9611

6 -3.30339

7 -1.24151

8 -0.72952

9 -2.50452

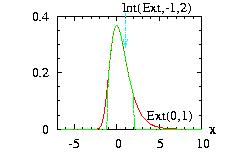

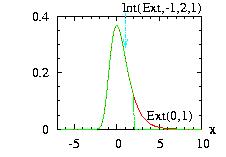

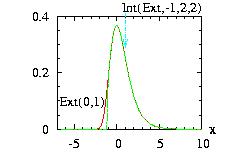

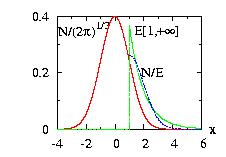

The class Interval.hpp allows you to choose an interval [A,B] where the function is zero outside of it. There are three possible values of the border for Interval(&distribution,A,B,border). We take as example in the figure A=−1, B=+2.

border=0: (by default)

f(x) → f(x) if A ≤ x ≤ B

f(x) → 0 if x < A or B < x

border=1:

f(x) → f(x) if −∞ < x ≤ B

f(x) → 0 if B < x

border=2:

f(x) → f(x) if A ≤ x < −∞

f(x) → 0 if x < A

then the new instance Interval(&distribution,A,B,border)

can be used at an usual distribution.

In addition to the general

syntax, we have:

| void setParameter(Distribution* d, double A, double B, double border=0); |

|

where Distribution* is the address of the distribution to transform. |

double successPerCent( );

|

|

return the percentage of success of the calls for ran( ) and ranVector( ) functions of the new interval instance. These functions can be produced in two ways:

|

| void refresh( ); |

|

if the distribution (Extreme in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each call of ran( ). Moreover the call of refresh() calls the refresh( ) function of the distribution given as parameter. |

| vector <double> Moments(Distribution* dist,vector <double> vecX) |

|

not defined |

| vector <double> MLE(Distribution* dist,vector <double> vecX) |

|

not defined |

All the other fit functions described in Fit are accessible.

The program to generate the second figure above could be:

// Example Interval1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Extreme Ext;

Ext.setParameter(0,1);

Interval Int;

Int.setParameter(&Ext,-1,2,1);

// vecX={-7, -6.99, -9.98,…, 9.99, 10}

std::vector<double> vecX(vec_create(1701,-7.,0.01));

// to create the figure above:

// print in file "Interval1.res", the vectors:

// i vecX density(Extreme) density(Interval)

// 0 -7 0 0

// 1 -6.99 0 0

// 2 -6.98 … ….

vec_print("Interval1.res",vecX,Ext.densityVector(vecX),

Int.densityVector(vecX));

// print 10 random numbers

vec_print(Int.ranVector(10),"ran ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Interval1.res" used to plot the figure above and print on the screen:

# i= ran

0 1.42973

1 -1.43828

2 -0.535245

3 -0.348377

4 0.0401327

5 0.31446

6 -0.261702

7 0.597054

8 0.783772

9 0.0878482

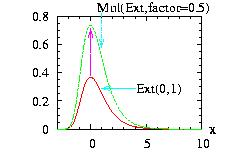

The class Multiply.hpp allows you to multiply the density

function by a positive factor.

The class Multiply.hpp allows you to multiply the density

function by a positive factor.

f(x) → f(x).factor

then the new instance, Multiply(&distribution,factor),

can be used at an usual distribution. This does not change

the way of producing the random number from this

distribution. However it will have an influence when we add

the distribution with the Sum class and

with the HeatBath class.

In addition to the general

syntax, we have:

| void setParameter(Distribution* d, double factor); |

|

where Distribution* is the address of the distribution to transform. |

| void refresh( ); |

|

if the distribution (Extreme in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each call of ran( ). Moreover the call of refresh() calls the refresh( ) function of the distribution given as parameter. |

Fit

All fit functions described in Fit are accessible.

Moreover the fit_keep vector is initialized with

the constraint that the

last parameter of the class, factor, is kept constant when using

LeastSquares_LM_cum.

The program to generate the figure above could be:

// Example Multiply1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Extreme Ext;

Ext.setParameter(0,1);

Multiply Mul;

Mul.setParameter(&Ext,2);

// vecX={-3, -2.99, -2.98,…, 9.99, 10}

std::vector<double> vecX(vec_create(1301,-3.,0.01));

// to create the figure above:

// print in file "Multiply1.res", the vectors:

// i vecX density(Extreme) density(Multiply)

// 0 -3 3.80054e-08 7.60109e-08

// 1 -2.99 4.59514e-08 9.19027e-08

// 2 -2.98 … ….

vec_print("Multiply1.res",vecX,Ext.densityVector(vecX),

Mul.densityVector(vecX));

// print 10 random numbers

vec_print(Mul.ranVector(10),"ran ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Multiply1.res" used to plot the figure above and print on the screen:

# i= ran

0 2.26301

1 -1.40563

2 -0.452687

3 -0.247977

4 0.191976

5 0.519449

6 -0.151694

7 0.879247

8 1.13524

9 0.247738

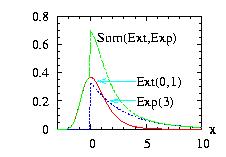

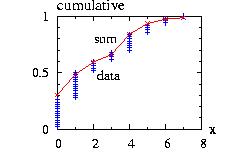

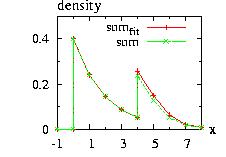

The class Sum.hpp allows you to add several distributions.

The class Sum.hpp allows you to add several distributions.

f1(x), f2(x) … → f1(x) + f2(x) + …

then the new instance, Sum(vector < &distribution > ),

can be used at an usual distribution.

In addition to the general

syntax, we have:

| void setParameter( vector <Distribution*> d); |

|

where d={d1,d2,…} is a vector composed of the addresses of the distributions to add. |

| void refresh( ); |

|

if the distribution (Extreme and Exponential in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each call of ran( ). Moreover the call of refresh() calls the refresh( ) function of the distributions given as parameter. |

|

vector <double>

Moments(Distribution*

dist,vector <double> vecX)

vector <double> MLE(Distribution* dist,vector <double> vecX) |

|

not defined |

All the other fit functions described in Fit are accessible.

The program to

generate the figure above could be:

// Example Sum1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Extreme Ext;

Ext.setParameter(0,1);

Exponential Exp;

Exp.setParameter(3.);

Sum sum;

sum.setParameter( c2v (&Ext,&Exp) );

// vecX={-3, -2.99, -2.98,…, 9.99, 10}

std::vector<double> vecX(vec_create (1301,-3.,0.01));

// to create the figure above:

// print in file "Sum1.res", the vectors:

// i vecX dens(Ext) dens(Exp) dens(sum)

// 0 -3 3.80054e-08 0 3.80054e-08

// 1 -2.99 4.59514e-08 0 4.59514e-08

// 2 -2.98 … … …

vec_print ("Sum1.res",vecX,Ext.densityVector (vecX),

Exp.densityVector (vecX),sum.densityVector (vecX));

// print 10 random numbers

vec_print(sum.ranVector (10),"ran ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

We have used the function included in Global.hpp ,

c2v < Template Type > (Type d1,

Type d2, …,dn)

which converts n elements

=(d1,d2,…,dn) in one

vector.

The program will create the file "Sum1.res" used to

plot the figure above and print on the screen:

# i= ran =

0 0.0512546

1 -0.247977

2 0.519449

3 0.879247

4 1.83828

5 1.11144

6 -0.582703

7 0.777123

8 1.10778

9 -0.0854141

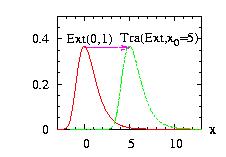

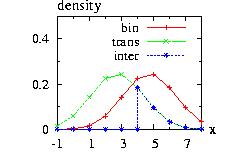

The class Translate.hpp allows you to translate the density

function by x0.

The class Translate.hpp allows you to translate the density

function by x0.

x → x+x0

then the new instance Translate(&distribution,x0)

can be used at an usual distribution.

In addition to the general

syntax, we have:

| void setParameter(Distribution* d, double x0); |

|

where Distribution* is the address of the distribution to transform. |

| void refresh( ); |

|

if the distribution (Extreme in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each call of ran( ). Moreover the call of refresh() calls the refresh( ) function of the distribution given as parameter. |

The program to generate the figure above could be:

// Example Translate1.cpp download

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

Extreme Ext;

Ext.setParameter(0,1);

Translate trans;

trans.setParameter(&Ext,5);

// vecX={-3, -2.99, -2.98,…, 12.99, 13}

std::vector<double> vecX(vec_create(1601,-3.,0.01));

// to create the figure above:

// print in file "Translate1.res", the vectors:

// i vecX density(Extreme) density(Translate)

// 0 -3 3.80054e-08 0

// 1 -2.99 4.59514e-08 0

// 2 -2.98 … …

vec_print("Translate1.res",vecX,Ext.densityVector(vecX),

trans.densityVector(vecX));

// print 10 random numbers

vec_print(trans.ranVector(10),"ran ");

}

catch (const LOPOR::Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Translate1.res" used to plot the figure above and print on the screen:

# i= ran

0 7.26301

1 3.59437

2 4.54731

3 4.75202

4 5.19198

5 5.51945

6 4.84831

7 5.87925

8 6.13524

9 5.24774

You can construct a new distribution class fairly easily. The only necessary function should be the ran( ) function or the density( ) function. If you also define some other functions like the cumulative( ) function, you will also be able to use the Transformations-Sum classes. If you do not know a simple way to produce the random numbers from this distribution, you should use one of the rejection methods: Hasting or HeatBath. We will present now some possibilities.

In this section we will show how we have constructed

the Exponential class. The

density mass function is :

f(x)= exp(− x/a)/a

It consists in one declaration file Exponential.hpp and one other file

Exponential.cpp.

// download Exponential.hpp

#ifndef EXPONENTIAL_HPP

#define EXPONENTIAL_HPP

#include "Distribution.hpp"

namespace LOPOR

{

class Exponential : public Distribution{

public:

Exponential( );

~Exponential( ){};

virtual void setParameter(const double& a) ;

virtual void setParameters(const std::vector <double> & parameters);

virtual double density (const double& x) ;

virtual double cumulative (const double& x) ;

virtual double mean ( ) ;

virtual double mode ( ) ;

virtual double variance ( ) ;

virtual double ran_fc(const double& ran) ;

virtual Distribution* clone( ) ;

virtual std::vector <double> moments(const std::vector<double > & vecX);

virtual std::vector <double> mle(const std::vector<double > & vecX);

virtual std::vector <double> fit_cum(const double x

, std::vector<double > & coeff);

virtual std::vector <double> fit_den(const double x

, std::vector<double > & coeff);

private:

double A;

};

} // !namespace LOPOR

#endif /* EXPONENTIAL_HPP */

and the Exponential.cpp file:

// download Exponential.cpp

#include "Error.hpp"

#include "Vector.hpp"

#include "Exponential.hpp"

LOPOR::Exponential::Exponential( )

{

type="double";

name="Exponential";

fit_keep_den_LM=c2v <int> ( );

fit_keep_cum_LM=c2v <int> ( );

setParameter(1);

}

void LOPOR::Exponential::setParameters(const std::vector <double> & parameters)

{

int temp=1;

if(parameters.size( ) != temp) throw Error("LOPOR:"+name

+":setParameter(vector <double> parameters): parameters should have "

+c2s(temp)

+" elements or parameters.size( )="+c2s(parameters.size( )));

setParameter(parameters[0]);

}

void LOPOR::Exponential::setParameter(const double& a)

{

Parameters=c2v(a);

A=a;

if(a < 0) throw Error(information( )+"::setParameter( ) = > The variable:"

+ c2s(a) +" must be > = 0");

Ftot=1;

}

double LOPOR::Exponential::density(const double& x)

{

if(x < 0) return 0.;

return exp(-x/A)/A;

}

double LOPOR::Exponential::cumulative(const double& x)

{

if(x < 0) return 0.;

return 1.-exp(-x/A);

}

double LOPOR::Exponential::mean( )

{

return A;

}

double LOPOR::Exponential::mode( )

{

return 0.;

}

double LOPOR::Exponential::variance( )

{

return A*A;

}

double LOPOR::Exponential::ran_fc(const double& ran)

{

return -A*log(1-ran);

}

LOPOR::Distribution* LOPOR::Exponential::clone( )

{

Exponential* clone = new Exponential( );

*clone = *this;

return clone;

}

std::vector <double> LOPOR::Exponential::moments

(const std::vector<double > & vecX)

{

if(vecX.size( ) ==0) throw Error("LOPOR::"

+name+"::moments(vecX) : no data in VecX");

double mean=vec_mean(vecX);

std::vector <double> vec=c2v<double > (mean);

setParameters(vec);

return vec;

}

std::vector <double> LOPOR::Exponential::mle(const std::vector<double > & vecX)

{

if(vecX.size( ) ==0) throw Error("LOPOR::"+name+"::mle(vecX) : no data in VecX");

std::vector <double> vec=c2v<double > (vec_mean(vecX));

setParameters(vec);

return vec;

}

std::vector <double> LOPOR::Exponential::fit_cum(const double x,

std::vector<double > & coeff)

{

if(coeff.size( )!= Parameters.size( )) throw Error("LOPOR::"+name

+"::fit: the coeff.size( )="+c2s(coeff.size( ))

+"!= nb of parameters="+c2s(Parameters.size( )));

if(coeff!=Parameters) setParameters(coeff);

// Levemberg-Marquardt: derivatives+function

std::vector <double> lm(coeff.size( )+2);

lm[0]=-(x/(Power(A,2)*Power("E",x/A))); // derivative by coeff[0]

lm[1]= 1/(A*Power("E",x/A)); // derivative by x

lm[2]=cumulative(x); // function

return lm;

}

std::vector <double> LOPOR::Exponential::fit_den(const double x,

std::vector<double > & coeff)

{

if(coeff.size( )!= Parameters.size( )) throw Error("LOPOR::"+name

+"::fit: the coeff.size( )="+c2s(coeff.size( ))

+"!= nb of parameters="+c2s(Parameters.size( )));

if(coeff!=Parameters) setParameters(coeff);

// Levemberg-Marquardt: derivatives+density

std::vector <double> lm(coeff.size( )+2);

lm[0]=(-A + x)/Power(A,2)*density(x); // derivative by coeff[0]

lm[1]= -(1/A)*density(x); // derivative by x

lm[2]=density(x); // density

return lm;

}

Explanations:

The other functions defined in General Syntax Distribution

are available automatically.

We have defined the function ran_fc and all the other

ran functions of the General Syntax Distribution: ran( ),

ranVector, ranVectorLH,

are available automatically. However for some distributions it is impossible to

inverse and solve the equation F-1(x)=y. Two choices:

The discrete distribution classes follow the same procedure as the continuous distributions. There are three points worth to be noted :

The library uses distributions to exchange information between elements,

therefore it is sometimes better to have a distribution

instead of a function.

The class FunctionDistribution

provides it. Only the density function of the general

syntax is defined, and in addition we have:

| void setParameter(double func(const double& x)) |

|

define the function |

An example of program:

// Example FunctionDistribution.cpp

#include "LOPOR.hpp"

using namespace LOPOR;

double func(const double& x) { return 2.*x; }

int main( )

{

try

{

FunctionDistribution function;

function.setParameter(func);

print(function.density(3.));

}

catch (const Error& error) { error.information( ); }

return 0;

}

And the output of this program is:

6

The class Hasting.hpp allows you to produce a random number

generator for any

density function. It is not as good as the HeatBath

but a little bit easier to implement. For an introduction and

a comparison with the HeatBath method see

[Loison2004].

The main point is to simulate a complicated distribution

d1 using another distribution d2

easier to simulate, using a kind of rejection method.

Contrary to the HeatBath the d2

function f2 is not

necessarily bigger than the d1 function

f1. Therefore

any distribution d2 is a possible candidate.

However the closest f2 is of f1 the

more efficient the algorithm will be. The only restriction

for the choice of f2 is that it must not be zero

if f1 is not zero.

The class Hasting.hpp allows you to produce a random number

generator for any

density function. It is not as good as the HeatBath

but a little bit easier to implement. For an introduction and

a comparison with the HeatBath method see

[Loison2004].

The main point is to simulate a complicated distribution

d1 using another distribution d2

easier to simulate, using a kind of rejection method.

Contrary to the HeatBath the d2

function f2 is not

necessarily bigger than the d1 function

f1. Therefore

any distribution d2 is a possible candidate.

However the closest f2 is of f1 the

more efficient the algorithm will be. The only restriction

for the choice of f2 is that it must not be zero

if f1 is not zero.

The new instance

Hasting(&distribution1,&distribution2)

can be used at an usual distribution. This method in combination with

the StepFunction class is the fastest method if the

ran_fc( ) of the distribution

d1 is unknown

[Loison2004].

If the distribution function f2 is constant, we get the

Metropolis algorithm.

If the distribution d2 is

the UniformDelta

distribution we

get the Restricted Metropolis procedure. This last procedure

must be used when the form of the distribution d1

is too wide to define an efficient function f2

[Loison2004].

In addition to the general

syntax, we have:

| void setParameter(Distribution* d1, Distribution* d2, double xini); |

|

The Distribution* d1

is the address of the distribution that we are interested in. |

|

double successPerCent( ); |

|

return the % of success of the calls for ran( ) and ranVector( ) functions of the new Hasting instance. |

| void refresh( ); |

|

if the distribution (Gamma in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each all of ran( ) call. Moreover the call of refresh() calls the refresh( ) function of the distributions given as parameter. |

Fit

All fit functions described in Fit are accessible.

The program to

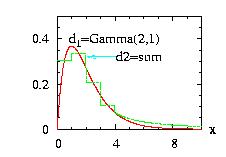

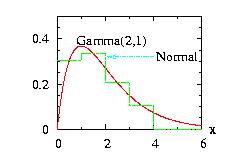

generate the figure above could be:

// Example Hasting1.cpp download

//

// Objective: have a random number generator for

// the Gamma class if we admit that we do not know

// how to implement it directly.

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

// The class which we do not know (!) the ran( ) function

Gamma Gam;

Gam.setParameter(2,1);

// Construct of the distribution to simulate Gamma

// The density should be as near as possible of the

// distribution studied (here Gamma)

//

// 1. For x between 0 and 4: a StepFunction with 5-1=4 steps

StepFunction Ste;

Ste.setParameter(&Gam,0,4,5);

// 2. For x > 4 : A Pareto fc: the class Interval

// with border=2 (last parameter) : [4,+oo[

Pareto Par;

Par.setParameter(1,16*Gam.density(4));

Interval Int;

Int.setParameter(&Par,4,11,2);

// 3. Sum of the two functions:

Sum sum;

sum.setParameter(c2v <Distribution*> (&Ste,&Int));

// The instance Has can be used as an instance of the Gamma class

Hasting Has;

Has.setParameter(&Gam,&sum,1);

// vecX={0, 9.99, 10}

std::vector<double> vecX(vec_create(1001,0.,0.01));

// to create the figure above:

// print in file "Hasting1.res", the vectors:

// i x Hasting=Gamma Sum

vec_print("Hasting1.res",vecX,Has.densityVector(vecX),

sum.densityVector(vecX));

// print 10 random numbers from the Gamma distribution

// through the Hasting instance

vec_print(Has.ranVector(10),"ran for Gamma ");

}

catch (const Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Hasting1.res" used to plot the figure above) and print on the screen:

# i= ran for Gamma

0 2.74109

1 7.38208

2 4.53815

3 0.61207

4 0.68343

5 1.38852

6 4.41928

7 4.99977

8 1.70519

9 1.70519

The class HeatBath.hpp allows you to produce a random number

generator for any

density function. This method is known generally as the

"rejection method". It is a little better than the Hasting

procedure,

but a little bit more difficult to implement. For an introduction and

a comparison with the Hasting method see

[Loison2004].

The main point is to simulate a complicated distribution

d1 using another distribution d2

which is easier to simulate, using a kind of rejection method.

Contrary to the Hasting method the d2

function f2 must be

bigger than the d1 function

f1.

The closest f2 is of f1 the

more efficient the algorithm will be.

Then the new instance,

HeatBath(&distribution1,&distribution2),

can be used at an usual distribution. This method in combination with

the StepFunction class is the fastest method if the

ran_fc( ) of the distribution

d1is unknown

[Loison2004].

The class HeatBath.hpp allows you to produce a random number

generator for any

density function. This method is known generally as the

"rejection method". It is a little better than the Hasting

procedure,

but a little bit more difficult to implement. For an introduction and

a comparison with the Hasting method see

[Loison2004].

The main point is to simulate a complicated distribution

d1 using another distribution d2

which is easier to simulate, using a kind of rejection method.

Contrary to the Hasting method the d2

function f2 must be

bigger than the d1 function

f1.

The closest f2 is of f1 the

more efficient the algorithm will be.

Then the new instance,

HeatBath(&distribution1,&distribution2),

can be used at an usual distribution. This method in combination with

the StepFunction class is the fastest method if the

ran_fc( ) of the distribution

d1is unknown

[Loison2004].

In addition to the general

syntax, we have:

| void setParameter(Distribution* d1, Distribution* d2); |

|

The Distribution* d1

is the address of the distribution that we are interested in. |

|

double successPerCent( ); |

|

return the % of success of the calls for ran( ) and ranVector( ) functions of the new HeatBath instance. |

| void refresh( ); |

|

if the distribution (Gamma in our example) has changed, you should refresh the class. This is not done automatically because it is very time-consuming to check it at each all of ran( ) call. Moreover the call of refresh() calls the refresh( ) function of the distributions given as parameter. |

Fit

All fit functions described in Fit are accessible.

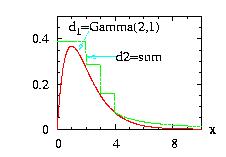

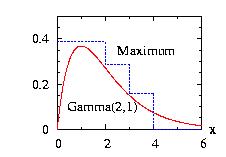

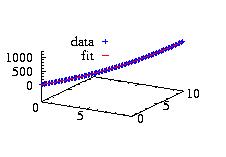

The program to

generate the figure above could be:

// Example HeatBath1.cpp download

//

// Objective: have a random number generator for

// the Gamma class if we admit that we do not know

// how to implement it directly.

//

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

// The class which we do not know (!) the ran( ) function

Gamma Gam;

Gam.setParameter(2,1);

// Construct of the distribution to simulate Gamma

// The density should be as near as possible of the

// distribution studied (here Gamma)

//

// 1. For x between 0 and 4: a StepFunction with 5-1=4 steps

// "Maximum": the step function is higher that the Gamma

StepFunction Ste;

Ste.setParameter(&Gam,0,4,5,"Maximum");

// 2. For x > 4 : A Pareto fc: the class Interval

// with border=2 (last parameter) : [4,+oo[

Pareto Par;

Par.setParameter(1,16*Gam.density(4));

Interval Int;

Int.setParameter(&Par,4,11,2);

// 3. Sum of the two functions:

Sum sum;

sum.setParameter(c2v <Distribution*> (&Ste,&Int));

// The instance HB can be used as an instance of the Gamma class

HeatBath HB;

HB.setParameter(&Gam,&sum);

// vecX={0, 9.99, 10}

std::vector<double> vecX(vec_create(1001,0.,0.01));

// to create the figure above:

// print in file "HeatBath1.res", the vectors:

// i x HeatBath=Gamma Sum

vec_print("HeatBath1.res",vecX,HB.densityVector(vecX),

sum.densityVector(vecX));

// print 10 random numbers from the Gamma distribution

// through the HeatBath instance

vec_print(HB.ranVector(10),"ran for Gamma ");

}

catch (const Error& error) { error.information( ); }

return 0;

}

And the program will create the file "Hasting1.res" used to plot the figure above and print on the screen:

# i= ran for Gamma

0 1.95226

1 0.667258

2 0.324795

3 1.43791

4 0.685709

5 0.616973

6 3.57849

7 0.563728

8 2.43355

9 2.14097

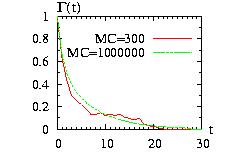

The MetropolisRestricted is related to the Hasting class,

however it is not based on a distribution. It allow the users

to generate random numbers from a multivariate distribution g. It consists

to create a Markov chain updating each variables consecutively. This is done at follow:

1. From a configuration {x0,x1,…} create a new configuration:

{x0new,x1,…} using

x0new=x0 ± δ0

with delta fixed at the beginning of the simulation.

2. Accept this new configuration with the probability g(new)/g(old)

Then try to updated the second variable, then the third, …

For more information see [Loison2004].

One flaw of this method is that the random number will be correlated and

a careful analysis should be done to measure the correlation using

the Autocorrelation function. An example is given

here. Moreover we need a certain number of step at the

beginning of the procedure to reach a configuration in equilibrium.

| void setParameter(double function(const vector<double>&), vector<double> x_ini,vector<double> delta_ini,int MC_eq=1000,int keep_data=1 ); |

|

The function we are interested in. |

|

vector<double> successPerCent( ); |

|

return the % of success of the procedure for each variable. |

|

vector<double> ran( ); |

|

return a vector composed of random number for each variable. |

|

vector<vector<double> > ranVector(int MC); |

|

return MC vectors, each composed of random numbers for each variable. |

An example of program can be found here.

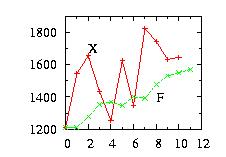

The StepFunction class is based on the Histogram class.

The user must give the {xi} coordinates and

the distribution d to be approximated, the class calculate the

probabilities {pi}. There are two options: the

StepFunction function f can be always bigger than the

distribution function, or the {pi} are calculated

using the middle of each [xi,xi+1]

interval. This class is very powerful in combination

with the HeatBath and the

Hasting classes. If the number of

step increases the function will be approximated better but

the time consumption will not necessary decrease because

more memory is needed to store the data

[Loison2004].

Hundreds steps of should be a maximum.

| class: | StepFunction.hpp |

| density: |

two choices:

|

| restrictions: |

n ≥ 2 pi ≥ 0 and at least one pi ≠ 0, there are n−1 probabilities pi xi < xi+1, there are n values xi |

| domain: | x0 ≤ x ≤ xn−1 |

| cumulative: | |

| mean: | |

| mode: | no closed form |

| variance: |

In addition to the general syntax, we have:

| void setParameter( Distribution* d, vector <double> x, string name_type, vector <double> vecMax); |

|

d is the distribution to

approximate |

| void setParameter( Distribution* d, double xmin, double xmax, int n, string name_type, vector <double> vecMax); |

|

The difference between the setParameter above is that the vector x is calculated by the class. You should give the xminimum, the xmaximum and the number of interval+1= n |

| vector <double> get_X( ) |

|

return the vector x |

| void change_X(vector <double> x) |

|

if the vector x calculated by the class does not fit your needs. Similar as redoing a setParameter( ). |

| vector <double> get_P( ) |

|

return the vector p (probabilities) |

| void change_P(vector <double> p) |

|

if the vector p calculated by the class does not fit your needs. |

| void normalize( ) |

|

Normalize the distribution, i.e. ∫x[o]x[end] density = 1 |

| int get_i ( double x ) |

|

return the number of the interval (0 to n−1) corresponding to the value x. |

|

vector <double> E.ranVectorLH(int n); double E.ran_fc(double y); |

|

not defined |

Fit No fit functions described in Fit are accessible.

Programs to generate the figures above are Hasting1.cpp for the first figure and HeatBath1.cpp for the second figure.

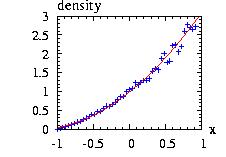

The class Walker.hpp is not based on a Distribution

class. You should use only the functions listed below.

This class is used in some distribution classes to

accelerate the simulations.

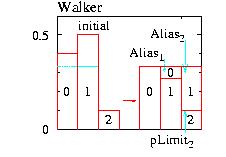

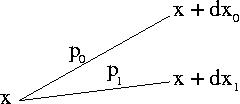

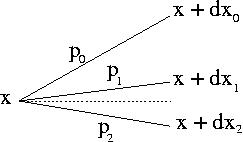

Walker's alias method handles in an economic way which new

state to choose among n possibilities.

The probabilities pi for a new state i

are stored in n different boxes of equal

height ∑pi/n.

Walker's construction has in each box only

one or two different probabilities. For an example with

n=3 see the figure. Before the simulation

starts one must have calculated and stored the probabilities

pLimiti which divides each box i. The upper

states in each box must also be stored in an array. These

states as ``subtenants'' have an ``alias'' whereas the

lower ones have the box number as correct address for the

state i.

The implementation has the following steps:

The class Walker.hpp is not based on a Distribution

class. You should use only the functions listed below.

This class is used in some distribution classes to

accelerate the simulations.

Walker's alias method handles in an economic way which new

state to choose among n possibilities.

The probabilities pi for a new state i

are stored in n different boxes of equal

height ∑pi/n.

Walker's construction has in each box only

one or two different probabilities. For an example with

n=3 see the figure. Before the simulation

starts one must have calculated and stored the probabilities

pLimiti which divides each box i. The upper

states in each box must also be stored in an array. These

states as ``subtenants'' have an ``alias'' whereas the

lower ones have the box number as correct address for the

state i.

The implementation has the following steps:

The time consumption is therefore independent on the number of states. The only limitation is the memory needed to store the arrays. The method to generate the arrays can be found in the Peterson1979. The syntax :

| void setParameter(vector <double> probabilities); |

|

where probabilities={p0,p1, …,pn−1} |

| double ran( ); |

|

return a random number following the probabilities distribution |

| double ran(double ra ); |

|

return a random number following the probabilities distribution and a new random number (uniform distribution between 0 and 1) ra which is calculated during the walker procedure |

| vector <double> ranVector(int n); |

|

return n random numbers following the probabilities distribution |

| vector <double> ranVector(int n, vector <double> ranVec); |

|

return n random numbers following the probabilities distribution and n random numbers (uniform distribution between 0 and 1) ranVec which are calculated during the walker procedure |

| vector <double> ranVectorLH(int n); |

|

return n random numbers following the probabilities distribution, using the Latin Hypercube sampling. Give a better result than ranVector(n), but you must be cautious when using it: all the random numbers must be used to calculate the integrals. |

| vector <double> ranVectorLH(int n, vector <double> ranVec); |

|

Same as previous line, but return also n random numbers (uniform distribution between 0 and 1) ranVec which are calculated during the walker procedure |

| vector <double> cumulativeVector(double Ftot); |

|

return the cumulative for all {i} as

a vector: cumulativeVector

={F0,

F1, … ,Fn−1}

={p0/Ftot,(p0+p1)/Ftot,

… ,1}. |

The library uses distributions to exchange information between elements,

therefore it is sometimes better to have a distribution

instead of a function. We have defined several function on the form of

a Distribution.

If you want to transform

a function in a distribution form you should use the class

FunctionDistribution defined thereafter.

Only the density of the

function is defined. You cannot use directly an instance of the distribution

function with the ran() function. If you need a random generator you should

use in combination the Hasting class or the

HeatBath class.

For the predefined distribution functions

(Exponential_fc ,

Laguerre_fc, …) the

fit_den function is defined and therefore you can use

the Levemberg-Marquardt method to fit parameters using

the Fit::LeastSquares_LM_den static function.

The class FunctionDistribution

transform a static function in an instance of

Distribution.

Only the density function of the general

syntax is defined, and in addition we have:

| void setParameter(double func(const double& x)) |

|

define the function |

An example of program:

// Example FunctionDistribution.cpp

#include "LOPOR.hpp"

using namespace LOPOR;

double func(const double& x) { return 2.*x; }

int main( )

{

try

{

FunctionDistribution function;

function.setParameter(func);

print(function.density(3.));

}

catch (const Error& error) { error.information( ); }

return 0;

}

And the output of this program is:

6

The Exponential_fc class defined the function

f(x) = B e A x

Only the density function of the general

syntax is defined, and in addition we have:

| void setParameter(A,B) |

|

void

setParameters(vector<double> parameters)

|

|

with parameters={A,B} |

The fit function Fit::LeastSquares_LM_den can be used with this class.

The Laguerre_fc class defined the function

f(x) = e−x/2 ∑n=0N an Ln(x)

Ln(x) = ex/n! dn/dxn (Xn e−x)

Be aware that we have add the exponential factor in front of the standard

Laguerre polynomial functions. We have:

L0(x) = 1

L1(x) = 1 − x

L2(x) = 1 − 2x + x2/2

Ln(x) = (2n − 1 − x)/n Ln−1

− (n − 1)/n Ln−2

Only the density function of the general

syntax is defined, and in addition we have:

|

void

setParameters(vector<double> parameters)

|

|

parameters={a0,a1,…} |

The fit function Fit::LeastSquares_LM_den

can be used with this class. Example of program:

Laguerre_fc laguerre; // define instance

laguerre.setParameters(c2v(0.5,0.2,1.)); // three first Laguerre fc

std::vector<double> X,Y; // create data for fit

for(double x=0; x<10; x += 0.1)

{

X.push_back(x);

Y.push_back(laguerre.density(x));

}

print("data from:",laguerre.information()); // display information

laguerre.setParameters(c2v(0.7,0.4,0.9)); // change parameters

print("before fit:",laguerre.information());

Fit::LeastSquares_LM_den(&laguerre,X,Y); // Fit

print("after fit:",laguerre.information());

And the output is:

data from: LOPOR::Laguerre_fc(0.5,0.2,1)

before fit: LOPOR::Laguerre_fc(0.7,0.4,0.9)

after fit: LOPOR::Laguerre_fc(0.5,0.2,1)

The Linear_fc class defined the function

f(x) = A + B * x

Only the density function of the general

syntax is defined, and in addition we have:

| void setParameter(A,B) |

|

void

setParameters(vector<double> parameters)

|

|

with parameters={A,B} |

The fit function Fit::LeastSquares_LM_den can be used with this class.

The Polynome_fc class defined the function

f(x) = ∑i=0N ai xi

f(x) = a0 + a1 x + a2 x2 + …

Only the density function of the general

syntax is defined, and in addition we have:

|

void

setParameters(vector<double> parameters) |

|

parameters={a0,a1,…} |

|

void setParameter(int degree) |

|

f(x) = 1 + x + x2 + … + xdegree |

Example:

Polynome_fc polynome;

polynome.setParameters(c2v(1.,1.5,1.));

print(polynome.information());

And the output is:

LOPOR::Polynome_fc( 1*x^0 + 1.5*x^1 + 1 x^2 )

The fit function Fit::LeastSquares_Linear_den

can be used with this class. We give thereafter the function

fit_den_linear used by this Fit function. It returns

a vector {x0,x1,…}

std::vector<double> LOPOR::Polynome_fc::fit_den_linear(const double x)

{

std::vector<double> lm(Parameters.size());

if(Parameters.size()>=1) lm[0]=1;

for(int i=1; i<Parameters.size(); ++i)

lm[i]=lm[i-1]*x;

return lm;

}

There are three main methods for generating multivariate random

vectors of n elements each.

The first is the acceptance/rejection method, the second

the conditional distribution, and the third the partially-specified-properties

transformation.

The acceptance/rejection method is mainly use in one dimension. For example

the class StepFunction use this method. There

are several problems:

First we need to know the exact form of the distribution

function f and not only the correlation matrix.

Second we have to define a function g which is always bigger

than the f, g ≥ f, that we know the inverse of the cumulative function

G−1. It is usually very difficult to find a correct function,

in particular if f has a lot of maximum and if we are in high dimension.

We can use the StepFunction

in two dimension [Loison2004] but in higher dimension

the memory needed increases exponentially.

The second method is to produce iteratively the elements: the first without

constraint, the second random number with the constraint with the first

distribution, the third with the constraint on the first and the

second distribution, … This procedure becomes very cumbersome

and almost impracticable for all but the normal distribution

NormalMulti and

NormalMultiPCA.

The third method is used by the NORTA algorithm and

is very powerful.

The class NormalMulti.hpp is not based on the Distribution

class.

The probability density function is:

f(x) = (2 π)−n/2 |Σ|1/2

exp[

− (x − μ)T Σ−1 (x − μ) / 2 )

]

with Σ is the variance-covariance matrix,

x={x1,x2,…xn} and

μ={μ1,μ2,…μn}

are a two vectors of n elements. T means transposed

A way to generate the vector x is to construct a vector z of n Normal random numbers

and to use:

x = MT z + μ

with the condition that MT M = Σ.

We use this method with a Cholesky

decomposition which gives the matrix

MT under the form of a lower diagonal matrix with 0

on the upper diagonal part. The class NormalMulti.hpp

provides these functions:

| void setParameter(vector<double> μ, vector<vector<double> > Σ) | ||||||||||||||||||||

|

μ = {μ1, μ2, …, μn}

|

||||||||||||||||||||

|

void setParameter(vector<double> μ, vector<double> σ, vector<vector<double> > Σ') void setParameter(vector<vector<double> > Σ') |

||||||||||||||||||||

|

μ = {μ1, μ2, …, μn}, if not given all μi=0

|

||||||||||||||||||||

| vector<double> ran( ) | ||||||||||||||||||||

|

return a vector of n normal random numbers correlated through the correlation matrix Σ |

||||||||||||||||||||

| vector<vector<double> > ranVector(int L ) | ||||||||||||||||||||

|

return a matrix of L vectors of n normal random numbers correlated through the correlation matrix Σ |

Example of program:

// Example NormalMulti.cpp

// call L*2 normal random numbers correlated

// plot the histogram to check that both variables

// follows a Normal distribution

#include "LOPOR.hpp"

using namespace LOPOR;

int main( )

{

try

{

// correlation matrix Sigma':

std::vector<std::vector<double> > correlations;

correlations=c2m(c2v(1.,0.6),c2v(0.6,1.));

// mean vector

std::vector<double> mean(c2v(1.,2.));

// sigma vector

std::vector<double> sigma(c2v(1.,2.));

// create instance

NormalMulti normalMulti;

normalMulti.setParameter(mean,sigma,correlations);

// results

std::vector<std::vector<double> > matrix_res;

// matrix_res={ {a0,b0}, {a1,b1}, …, {aL,bL} }

matrix_res=normalMulti.ranVector(100000);

// matrix_res={ {a0,a1,a2…,aL} , {b0,b1,b2,…,bL} }

matrix_res=matrix_transposed(matrix_res);

// check correlation

print("correlations a.b=",

vec_mean(vec_multiply(

vec_add(matrix_res[0],-mean[0]),

vec_add(matrix_res[1],-mean[1])

))

, ", exact=",correlations[0][1]*sigma[0]*sigma[1]);

// Construct histogram on [-5:10] with 100 bins with normalization

// and print in files

vec_histogram_print("NormalMulti0.res",matrix_res[0],-5,10,100);

vec_histogram_print("NormalMulti1.res",matrix_res[1],-5,10,100);

}

catch (const Error& error) { error.information( ); }

return 0;

And the output is:

correlations a.b= 1.18792 , exact= 1.2

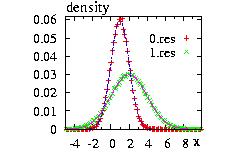

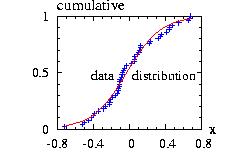

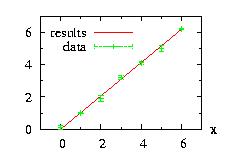

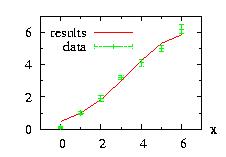

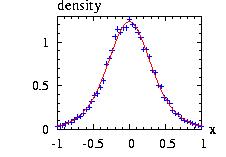

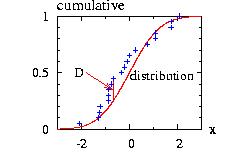

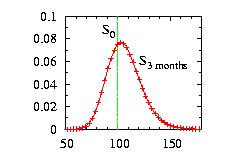

Columns 3 as function of the column 2 of the files

"NormalMulti0.res" and "NormalMulti1.res" and the densities

e-(x-mean)2/(2 σ2)

to check the results.

The class NormalMultiPCA.hpp is not based on the Distribution

class.

The probability density function is:

f(x) = (2 π)−n/2 |Σ|1/2

exp[

− (x − μ)T Σ−1 (x − μ) / 2 )

]

with Σ is the variance-covariance matrix,

x={x1,x2,…xn} and

μ={μ1,μ2,…μn}

are a two vectors of n elements. T means transposed

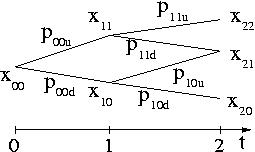

We first diagonalize Σ = Γ Λ ΓT